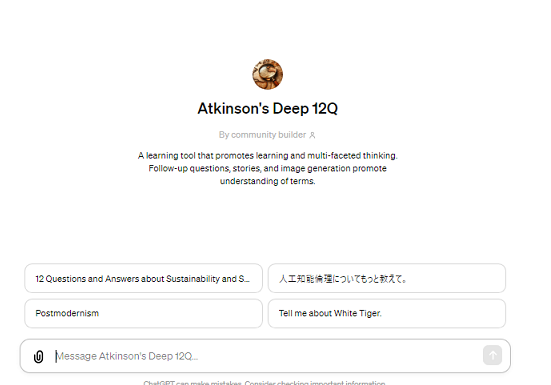

Example of an exploratory search with Atkinson's Deep 12Q (logistic regression and SVM)

ChatGPT Plus members can easily use Atkinson's Deep 12Q, which is registered as a GPT.

Here is an example of exploratory learning about logistic regression and SVM.

Open the GPTs screen.

Logistic regression and SVM

Below are the results generated by Atkinson's Deep 12Q.

Notice how it generates 12 questions and answers from just one keyword.

The AI also asks follow-up questions to facilitate understanding.

Interesting stories and images can also be generated on request.

(The following is a quote from the chat)

You:

Logistic regression and SVM

Atkinson's Deep 12Q:

Logistic Regression and SVM (Support Vector Machine)

1. What is the origin or cause of logistic regression and SVM?

Logistic regression originated from the need to model binary outcomes in data and was formalized by statistician David Cox in 1958. SVM was introduced by Vladimir Vapnik and Alexey Chervonenkis in 1963, primarily to perform classification tasks.

2. What caused logistic regression and SVM?

The development of logistic regression was caused by the need for a regression method suitable for binary outcomes, used extensively in fields like biology and economics. SVM was created as part of the effort to develop algorithms that not only classify data but also find the optimal boundary (or hyperplane) that separates classes.

3. What history or records exist about logistic regression and SVM?

Logistic regression and SVM have a rich history documented in statistical and machine learning literature. Logistic regression has evolved through applications in medical statistics and social sciences, while SVM became prominent in the 1990s with the introduction of the kernel trick, which allowed it to handle non-linear classification.

4. What are the characteristics, qualities, or features of logistic regression and SVM?

Logistic regression is characterized by its ability to provide probabilities for outcomes, making it interpretable and useful for risk assessment. SVM is known for its robustness and effectiveness in high-dimensional spaces, particularly because it focuses on the points (support vectors) that are most informative for defining the classifier.
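As an aside to the chat, the two characteristics named here can be sketched in a few lines of plain Python. Everything below is an illustrative assumption rather than output from Atkinson's Deep 12Q: the four toy points, and the hyperplane `w`, `b`, which was derived by hand as the maximum-margin separator for those points. The same scores are reused for the logistic view only to show how a score becomes a probability.

```python
import math

def sigmoid(z):
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical toy data: two features per point, labels are +1 or -1.
points = [(2.0, 2.0), (3.0, 3.0), (0.0, 0.0), (-1.0, -1.0)]
labels = [1, 1, -1, -1]

# Assumed hand-derived maximum-margin hyperplane for this toy data.
w = (0.5, 0.5)
b = -1.0

def score(x):
    """Signed score w.x + b of a point relative to the hyperplane."""
    return w[0] * x[0] + w[1] * x[1] + b

# Logistic view: every point gets a probability of belonging to class +1.
probabilities = [sigmoid(score(x)) for x in points]

# SVM view: support vectors are the points lying exactly on the margin,
# i.e. those with y * (w.x + b) equal to 1.
support_vectors = [
    x for x, y in zip(points, labels)
    if abs(y * score(x) - 1.0) < 1e-9
]

print([round(p, 3) for p in probabilities])
print(support_vectors)
```

Only the two points closest to the boundary end up as support vectors; moving the other two farther away would leave the hyperplane unchanged, while every point still receives its own probability.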

5. What is most associated with logistic regression and SVM? What resembles them?

Logistic regression is often associated with other generalized linear models (GLM). It resembles probit regression, another GLM used for binary classification. SVM is related to other margin-based classification algorithms and resembles linear discriminant analysis in its objective of separating classes by a line or hyperplane.

6. What is the utility value of logistic regression and SVM? How can they be used?

Logistic regression is widely used for predicting the probability of occurrence of an event, particularly useful in medical fields and financial forecasting. SVM is utilized for classification tasks in various domains such as image recognition, bioinformatics, and text categorization due to its effectiveness in handling complex, high-dimensional data.

7. What do logistic regression and SVM prove? What can be inferred?

Strictly speaking, neither method proves anything. Logistic regression models the log odds of an outcome as a linear function of the predictors, so its fitted coefficients can be used to infer the significance and direction of each predictor's effect on a binary outcome. SVM finds the maximum-margin separating hyperplane in feature space when one exists, and its support vectors identify the training points (not the features) that determine the decision boundary.
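As an aside to the chat, the two models can be written in standard textbook notation. The symbols below (weights $w$, bias $b$, inputs $x_i$, labels $y_i$) are assumptions introduced for illustration and do not appear in the chat output; the SVM is shown in its hard-margin form, which presumes the classes are linearly separable.

```latex
% Logistic regression: the log odds are linear in the predictors
\log\frac{p(y=1\mid x)}{1-p(y=1\mid x)} = w^\top x + b

% Hard-margin SVM: the widest margin that still separates the classes
\min_{w,\,b}\ \tfrac{1}{2}\lVert w\rVert^2
\quad\text{subject to}\quad
y_i\,(w^\top x_i + b) \ge 1,\qquad y_i \in \{-1,+1\}
```

The training points for which the constraint holds with equality are the support vectors.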

8. What are the natural consequences of logistic regression and SVM? What happens because of them?

The use of logistic regression often leads to better understanding and prediction of binary outcomes, influencing decision-making in risk management. The use of SVM in classification tasks often results in high-accuracy models, especially on complex datasets, influencing advancements in fields requiring precise classification.

9. What is the future or natural end of logistic regression and SVM?

The future of logistic regression and SVM likely involves integration with more complex machine learning models and techniques. They might be extended with artificial intelligence advancements to handle larger datasets more efficiently and with greater predictive accuracy.

10. What does AI think about logistic regression and SVM? What's the general impression?

AI views logistic regression as a fundamental, robust statistical tool ideal for interpretative models. SVM is regarded as a powerful classifier, particularly respected for its theoretical foundation and versatility in handling different types of data.

11. What general information is there about logistic regression and SVM?

General information indicates that both logistic regression and SVM are crucial tools in the machine learning toolkit, each with specific strengths: logistic regression for probability estimation and SVM for optimal classification in complex and varied datasets.

12. What have you heard about logistic regression and SVM? From whom, and when?

Most information about logistic regression and SVM comes from academic sources and machine learning practitioners. Notable mentions are often found in foundational texts like "Pattern Recognition and Machine Learning" by Christopher Bishop and online platforms like Coursera or edX, which frequently update their courses reflecting the latest uses and developments in the field.

Follow-up questions:

How has the use of logistic regression and SVM influenced decision-making in specific industries?

Can logistic regression and SVM be integrated into neural network architectures?

What are the limitations of logistic regression and SVM in modern applications?

Would you like to hear a story based on logistic regression and SVM?

You:

Please give us a story that deepens the above logistic regression and SVM learnings under the title "Failures and Successes of a New Data Scientist".

Atkinson's Deep 12Q:

Failures and Successes of a New Data Scientist

Once in the bustling city of Techville, there was a new data scientist named Alex. Fresh out of university with a degree in data science, Alex was brimming with enthusiasm and theoretical knowledge, eager to solve real-world problems at their first job at InnovateTech, a leading tech company known for its cutting-edge research and products.

The Challenge Begins

Alex's first project involved improving the recommendation system for a popular video streaming platform. The goal was straightforward: use user data to predict whether a viewer would like a particular video. The company had been using logistic regression, and Alex was tasked with exploring whether SVM could enhance the system’s accuracy.

Early Failures

Alex, confident in their academic prowess, jumped straight into implementing a complex SVM model. However, they soon faced the harsh realities of real-world data science. The SVM model, while theoretically superior, did not perform as expected. It was overfitting, too sensitive to the nuances of the training data, and not generalizing well to new, unseen data. Alex felt a wave of frustration: their first major task, and they were already stumbling.

Learning from Mistakes

Determined not to let this setback define their career, Alex revisited their approach. They spent nights reading up on logistic regression’s strengths and SVM's intricacies, understanding that each had its place. Alex learned to appreciate logistic regression for its simplicity and interpretability, qualities that were initially overlooked.

Success Through Integration

With new insights, Alex decided to integrate the predictability of logistic regression with the robustness of SVM. They used logistic regression to handle baseline predictions and identify straightforward cases, while SVM was refined to focus on complex, less obvious patterns. This hybrid model balanced the strengths of both techniques, leading to a significant improvement in the recommendation system's performance.

Presenting the Findings

The true test came when Alex presented their findings to the senior data scientists and stakeholders. Their presentation detailed not just the successful hybrid model, but also the failures and learnings along the way. The audience was particularly impressed with Alex’s transparency and their ability to leverage both logistic regression and SVM effectively.

Recognition and Reflection

The project was a success, and Alex received commendation for their innovative approach and resilience. Reflecting on the journey, Alex realized that the real world required more than just applying algorithms; it demanded an understanding of when and how to use them, a lesson they would carry forward in their burgeoning career.

Epilogue

As Alex continued to grow in their role, they often looked back at their first project as a pivotal moment. It taught them the intricate dance between theory and practice, and the beauty of learning from failure. Their story became a staple for new hires at InnovateTech, a testament to the enduring journey of learning and adaptation in the field of data science.

This story highlights the practical challenges and learning opportunities in data science, particularly in the use of logistic regression and SVM. Would you like to create an iconic image to represent this story?

You:

Please provide imagery that promotes learning.

Atkinson's Deep 12Q:

Here is an image that captures the theme of learning and growth in data science. It features a diverse group of young professionals around a glowing computer screen, in a high-tech office environment. This imagery is designed to inspire and promote the values of collaboration, innovation, and practical learning in the field. Feel free to take a closer look!

You:

Follow-up question.

How has the use of logistic regression and SVM affected decision making in a particular industry?

Can logistic regression and SVM be integrated into neural network architectures?

What are the limitations of logistic regression and SVM in modern applications?

I am also interested in the answers to the follow-up questions above. Can you please answer them?

Atkinson's Deep 12Q:

Certainly! Let’s explore the answers to your follow-up questions:

1. How has the use of logistic regression and SVM affected decision making in a particular industry?

In the healthcare industry, logistic regression and SVM have significantly influenced decision-making, particularly in patient diagnosis and treatment planning. Logistic regression is commonly used to predict patient outcomes based on clinical variables, such as the likelihood of developing a specific disease. SVM, on the other hand, is often employed in image classification tasks, such as identifying cancerous tissues from medical imaging. These models help healthcare professionals make informed decisions by providing accurate, data-driven insights, thereby improving patient outcomes and optimizing treatment protocols.

2. Can logistic regression and SVM be integrated into neural network architectures?

Yes, logistic regression and SVM can be integrated into neural network architectures, although in different ways. Logistic regression can be used as the final layer in a neural network to perform binary classification; this is essentially what happens in a basic feedforward network with a sigmoid activation function at the output layer. SVM can also be integrated but is more complex. For instance, the concept of SVM (maximizing the margin between classes) can be used to design loss functions in neural networks, which can help in learning more robust features. This integration combines the strengths of neural networks in feature learning with the robust classification capabilities of SVM.
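As an aside to the chat, here is a minimal sketch of the two integration routes just described, in plain Python with no neural network library. The hidden activations, output weights, and function names are hypothetical. The same final-layer score is fed either through a sigmoid with a log loss (the logistic regression view) or into a hinge loss on the raw score (the SVM-style margin view).

```python
import math

def sigmoid(z):
    """Logistic function used as the output activation."""
    return 1.0 / (1.0 + math.exp(-z))

def output_score(features, weights, bias):
    """Final linear layer: weighted sum of the last hidden layer's features."""
    return sum(w * f for w, f in zip(weights, features)) + bias

def log_loss(score, label01):
    """Binary cross-entropy on a sigmoid output; label is 0 or 1."""
    p = sigmoid(score)
    return -(label01 * math.log(p) + (1 - label01) * math.log(1 - p))

def hinge_loss(score, label_pm1):
    """SVM-style margin loss on the raw score; label is -1 or +1."""
    return max(0.0, 1.0 - label_pm1 * score)

hidden = [0.5, -1.0, 2.0]    # hypothetical hidden-layer activations
weights = [1.0, 0.5, 1.0]    # hypothetical output-layer weights
bias = 0.0

s = output_score(hidden, weights, bias)   # 0.5 - 0.5 + 2.0 = 2.0
bce = log_loss(s, 1)                      # positive example, 0/1 labels
hinge = hinge_loss(s, 1)                  # positive example, -1/+1 labels

print(s, round(bce, 4), hinge)
```

With a score of 2.0 the example already clears the margin of 1, so the hinge loss is exactly zero, while the log loss stays small but positive and keeps pushing the score higher. That difference is the practical distinction between the two output-layer choices.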

3. What are the limitations of logistic regression and SVM in modern applications?

The limitations of logistic regression and SVM are becoming apparent as data complexity increases. Logistic regression can struggle with non-linear relationships unless they are explicitly modeled with transformations or interactions, which can make the model complex and hard to interpret. It also assumes that the log odds are a linear function of the predictors, so its decision boundary is linear, which often does not match real-world data. SVM, while effective in many high-dimensional problems, can be computationally intensive, especially with large datasets and non-linear kernels. It also doesn’t directly provide probability estimates, which are often needed for decision-making processes. Both models can also be sensitive to the quality of input data and require careful feature selection and preprocessing to perform optimally.
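As an aside to the chat, the point about non-linear relationships can be illustrated with the classic XOR pattern. The sketch below is a plain-Python logistic regression trained by batch gradient descent; the helper names, learning rate, and step count are assumptions chosen for this toy case. It is fitted twice: once on the two raw features, and once with a hand-added interaction term `x1 * x2`.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(w, x):
    """Probability of class 1; w[0] is the bias, w[1:] the feature weights."""
    return sigmoid(w[0] + sum(wi * xi for wi, xi in zip(w[1:], x)))

def fit_logistic(rows, labels, lr=1.0, steps=5000):
    """Batch gradient descent on the mean log loss, starting from zeros."""
    w = [0.0] * (len(rows[0]) + 1)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in zip(rows, labels):
            err = predict_proba(w, x) - y
            grad[0] += err
            for j, xi in enumerate(x):
                grad[j + 1] += err * xi
        w = [wi - lr * g / len(rows) for wi, g in zip(w, grad)]
    return w

def accuracy(w, rows, labels):
    """Share of points classified correctly with a 0.5 threshold."""
    hits = sum(
        int((predict_proba(w, x) >= 0.5) == bool(y))
        for x, y in zip(rows, labels)
    )
    return hits / len(rows)

# XOR: the label is 1 when exactly one of the two inputs is 1.
raw = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
labels = [0, 1, 1, 0]

# Same data with an explicit interaction feature x1 * x2 appended.
with_interaction = [(x1, x2, x1 * x2) for x1, x2 in raw]

acc_linear = accuracy(fit_logistic(raw, labels), raw, labels)
acc_interaction = accuracy(
    fit_logistic(with_interaction, labels), with_interaction, labels
)

print(acc_linear, acc_interaction)
```

On the raw features no linear boundary can classify more than three of the four points correctly, whereas the added interaction term makes the pattern separable and the model fits all four. The cost is the one named above: the extra term has to be chosen by hand and makes the coefficients harder to read.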

These insights show how logistic regression and SVM contribute to and sometimes limit modern data science applications, particularly as new methods like deep learning provide alternative solutions for complex problems.